
Do you like to talk to voice assistants on your phone?

Are you a fan of Siri, Alexa, or Cortana setting up an alarm, calling your pals, searching the internet for you, arranging a meeting, or playing songs?

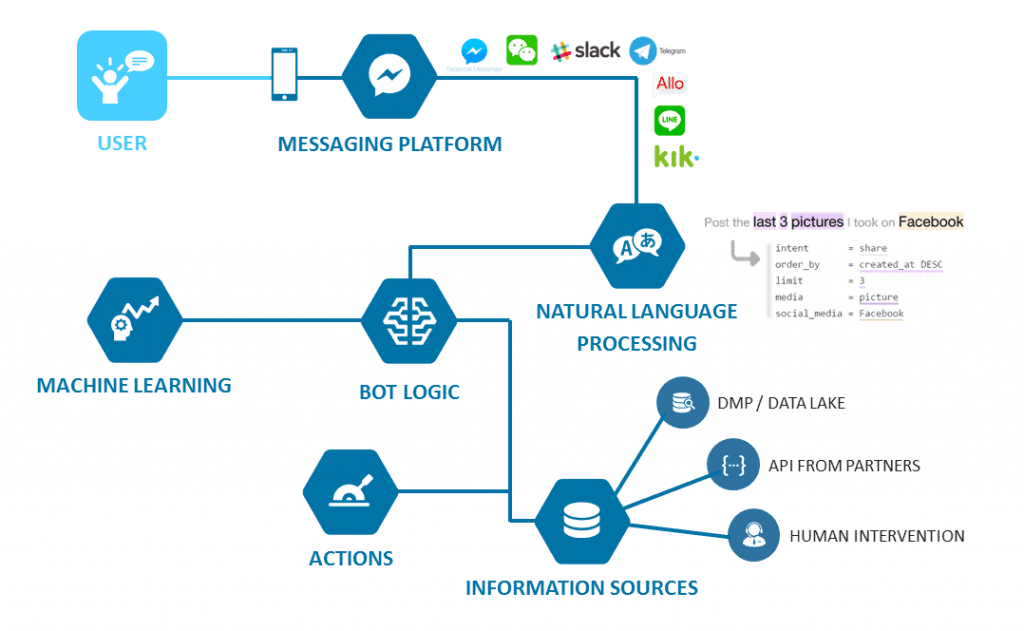

Many people agree that machine learning produces the best chatbots for general and routine tasks. Moreover, since live agents aren’t available all the time, these conversational agents can take the lead, chat with people, and perform the actions they ask for.

If you’ve heard about chatbots and how they work, then you probably know about machine learning as well. Put simply, machine learning is an integral part of what lets a chatbot function 24/7/365. It is no wonder that machine learning is considered the future of chatbots.

But the real question is: do you know anything about chatbot machine learning?

A chatbot built with machine learning algorithms is called a machine learning chatbot. Such a chatbot learns everything from its data, including human-to-human dialogues, which are fed to it through machine learning code.

Thanks to machine learning, chatbots can now be trained to develop their conversational abilities, and you can teach them to converse with people. One of the main reasons chatbots have gained such prominence in the market is their ability to hold human-like conversations. However, everything a chatbot can do depends on the datasets and algorithms used. The more relevant data you have, the more effective machine learning becomes and the more conversational your chatbot will be.

Before we get into how to develop a machine learning chatbot, we need to know a little about the design concepts.

Chatbots use data as fuel, and machine learning is what turns that data into conversational ability.

However, feeding data to a chatbot isn’t about gathering or downloading just any large dataset; you can create your own dataset to train the model. To code such a chatbot, you first need to understand what its intents are.

Wondering what intent means?

An intent is the goal a user has in mind when sending a message. It denotes the idea behind each message that a chatbot receives from a particular user. So, when you know the group of customers you want the chatbot to interact with, you have a clearer idea of how to develop the chatbot, what data it needs, and how to code a chatbot solution that works. The intents a chatbot handles vary from one solution to another.

That said, it is necessary to understand the intents behind your chatbot in relation to the domain you are creating it for.

For example, if you are building a Shopify chatbot, your intent will be to provide a seamless experience for all the customers visiting your website or app. Using the right machine learning approach for your chatbot will not only improve the customer experience but can also boost your sales.

Wondering why intent is essential?

Because intents drive what the chatbot does: answering questions, looking up customer information, and performing actions that keep the conversation with the user going. A chatbot needs to understand the intent behind a user’s query. Once you know the idea behind a question, responding to it becomes easy. That is the gist of human-to-human conversation.

Next question, how can you make your chatbot understand the intent of a user and provide accurate answers at the same time?

Well, you can define different intents, create training samples for each of them, and then train a model for your chatbot on that sample data.
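To make the idea of intents and training samples concrete, here is a minimal sketch in JavaScript. The intent names and sample phrases are hypothetical, and the "model" is a simple word-count scorer rather than a trained neural network; it only illustrates how labeled samples per intent let a bot map a new message to an intent.

```javascript
// Hypothetical intents for a Shopify-style store; names and samples are illustrative only.
const intents = {
  greeting: ["hi there", "hello", "good morning"],
  order_status: ["where is my order", "track my package", "has my order shipped"],
  returns: ["i want to return an item", "how do i get a refund", "return policy"],
};

// Lowercase the text and split it on anything that is not a letter or digit.
function tokenize(text) {
  return text.toLowerCase().split(/[^a-z0-9]+/).filter(Boolean);
}

// "Training": count how often each word appears in each intent's samples.
function train(intents) {
  const model = {};
  for (const [intent, samples] of Object.entries(intents)) {
    const counts = {};
    for (const sample of samples) {
      for (const word of tokenize(sample)) counts[word] = (counts[word] || 0) + 1;
    }
    model[intent] = counts;
  }
  return model;
}

// Score each intent by summing the counts of the message's words; the highest score wins.
function classify(model, message) {
  let best = null;
  let bestScore = 0;
  for (const [intent, counts] of Object.entries(model)) {
    let score = 0;
    for (const word of tokenize(message)) score += counts[word] || 0;
    if (score > bestScore) {
      best = intent;
      bestScore = score;
    }
  }
  return best; // null when the message shares no words with any intent
}

const model = train(intents);
console.log(classify(model, "Where is my package?")); // → order_status
```

A real chatbot would replace the word counts with a learned classifier, but the workflow stays the same: more and better samples per intent lead to more accurate intent detection.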

Another pivotal question to address is how to develop a machine learning chatbot.

Or, more precisely, how does a chatbot service work for your business?

Well, the answer is quite simple.

When you are creating a chatbot, your goal should be a product that requires minimal or no human intervention.

The following steps detail the development process for a deep learning chatbot.

The first step in any machine learning process is to prepare data. You can use thousands of existing interactions with your customers to train your chatbot. These datasets need to be detailed and varied, cover all the popular conversational topics, and include human interactions. The central idea is that your chatbot needs plenty of data points to learn from. This process is called data ontology creation, and your goal in this process is to collect as many interactions as you can.

This is not a mandatory step, but depending on your data source, you might have to split your data and reshape it into single rows of observations. These rows are called message-response pairs, and they are fed to a classifier.

The central idea is to pair each message with a response. After that, the incoming dialogues serve as textual cues from which the model predicts the chatbot’s response to a question.

You may also need to set certain restrictions when building these pairs: for example, the conversation has to be between two people only, consecutive messages from the same sender can be merged into a single unit, and the response to a message must come within 5 minutes.

Once you have restructured your data this way, each exchange reads like a genuine conversation between two humans, hiding the machine aspect of a chatbot.
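The restrictions above can be sketched as a small JavaScript function. This is an illustration under stated assumptions: each message is a hypothetical `{ sender, text, time }` object, the log is already in chronological order, and "within 5 minutes" is measured from the end of one turn to the start of the next.

```javascript
const FIVE_MINUTES_MS = 5 * 60 * 1000;

// Turn a chronological chat log into message-response pairs.
function buildPairs(messages, maxGapMs = FIVE_MINUTES_MS) {
  // Restriction 1: only conversations between exactly two people.
  const senders = new Set(messages.map((m) => m.sender));
  if (senders.size !== 2) return [];

  // Restriction 2: merge consecutive messages from the same sender into one turn.
  const turns = [];
  for (const m of messages) {
    const t = new Date(m.time).getTime();
    const last = turns[turns.length - 1];
    if (last && last.sender === m.sender) {
      last.text += " " + m.text;
      last.end = t;
    } else {
      turns.push({ sender: m.sender, text: m.text, start: t, end: t });
    }
  }

  // Restriction 3: a turn counts as a response only if it starts within maxGapMs
  // of the end of the previous turn.
  const pairs = [];
  for (let i = 1; i < turns.length; i++) {
    if (turns[i].start - turns[i - 1].end <= maxGapMs) {
      pairs.push({ message: turns[i - 1].text, response: turns[i].text });
    }
  }
  return pairs;
}

// Hypothetical sample log.
const chat = [
  { sender: "customer", text: "Hi,", time: "2024-01-01T10:00:00Z" },
  { sender: "customer", text: "is my order shipped?", time: "2024-01-01T10:00:20Z" },
  { sender: "agent", text: "Yes, it left yesterday.", time: "2024-01-01T10:02:00Z" },
  { sender: "customer", text: "Thanks!", time: "2024-01-01T11:00:00Z" },
];
console.log(buildPairs(chat));
```

Here the two customer messages merge into one turn, the agent's reply becomes its response, and the late "Thanks!" is dropped because it arrives almost an hour later.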

All in all, after data collection, you need to refine the data into text exchanges that help your chatbot development process, removing URLs, image references, stop words, etc. Moreover, the conversation pattern you pick will define the chatbot’s response system, so you need to be precise about what you want it to talk about and in what tone.
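A minimal cleaning pass could look like the JavaScript below. It assumes image references appear in markdown form (`![alt](file)`) and uses a deliberately tiny, hypothetical stop-word list; real projects usually rely on a much larger list and may keep stop words when training a generative model.

```javascript
// Tiny illustrative stop-word list; a real one would be far longer.
const STOP_WORDS = new Set(["a", "an", "the", "is", "are", "to", "of", "and", "or", "in", "on", "at", "for", "it"]);

function cleanMessage(text) {
  return text
    .replace(/!\[[^\]]*\]\([^)]*\)/g, " ")     // markdown image references like ![alt](pic.png)
    .replace(/https?:\/\/\S+|www\.\S+/gi, " ") // URLs
    .toLowerCase()
    .replace(/[^a-z0-9'\s]/g, " ")             // punctuation and symbols (apostrophes kept)
    .split(/\s+/)
    .filter((w) => w && !STOP_WORDS.has(w))    // drop empty tokens and stop words
    .join(" ");
}

console.log(cleanMessage("Check the photo ![shoe](shoe.png) at https://example.com/item?id=3 - is it in stock?"));
// → check photo stock
```

Image references are stripped before URLs so that a link inside an image reference does not leave stray brackets behind.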

Pre-processing is the stage in which you teach your chatbot the nuances of the English language, keeping in mind the form of English practiced in the region and taking special note of grammar, spelling, punctuation, notation, etc. That way, the chatbot can respond to grammatically incorrect questions by understanding the meaning behind them.

This process involves several sub-processes, such as tokenizing, stemming, and lemmatizing the chats. In layman’s terms, this step makes the chat text easier for the machine learning model to read and learn from. In this step, you need to employ several tools to process the collected data, create parse trees of the chats, and improve their technical language through machine learning.
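To show what tokenizing, stemming, and lemmatizing do, here is a deliberately crude JavaScript sketch. The suffix-stripping stemmer is a toy (it is not the Porter algorithm and will mangle words like "shoes"), and the lemma dictionary is a tiny hypothetical lookup table; production pipelines use dedicated NLP libraries for these steps.

```javascript
// Split text into lowercase word tokens (letters, digits, apostrophes).
function tokenize(text) {
  return text.toLowerCase().match(/[a-z0-9']+/g) || [];
}

// Crude suffix-stripping stemmer for illustration only; NOT the Porter algorithm.
const SUFFIXES = ["ing", "ed", "es", "s"];
function stem(word) {
  for (const suffix of SUFFIXES) {
    // Keep at least three characters of stem so short words like "is" survive.
    if (word.endsWith(suffix) && word.length - suffix.length >= 3) {
      return word.slice(0, -suffix.length);
    }
  }
  return word;
}

// Tiny hypothetical lemma dictionary mapping irregular forms to their base word.
const LEMMAS = new Map([["was", "be"], ["were", "be"], ["is", "be"], ["bought", "buy"], ["shipped", "ship"]]);

// Lemmatize when the dictionary knows the word, otherwise fall back to stemming.
function preprocess(text) {
  return tokenize(text).map((w) => (LEMMAS.has(w) ? LEMMAS.get(w) : stem(w)));
}

console.log(preprocess("Orders were shipped, tracking is working"));
// → [ 'order', 'be', 'ship', 'track', 'be', 'work' ]
```

The design choice to try the dictionary first reflects the usual trade-off: lemmatization is more accurate but needs vocabulary knowledge, while stemming is cheap and rule-based.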

Once you’ve collected, refined, and formatted the data, you need to decide on the type of chatbot you want to develop.

Broadly, there are two different types of chatbots:

Generative chatbot: Based on a generative model, this type of chatbot doesn’t pick its replies from a conversational repository; it generates them. It is an advanced form of chatbot that employs machine learning to respond to user queries. Many modern chatbots follow the generative model, so they can attempt almost any type of question and bring a more human quality to their conversations. A deep learning chatbot is therefore more adaptable to its customers’ queries, but it should not be mistaken for a human. For new chatbot developers, mastering this model is a demanding task that can take years of machine learning study.

Retrieval-based chatbot: This type of chatbot uses a predefined repository of responses to answer queries. It can only answer the questions its repository covers, and it may give the same answer to two different questions. You also need to choose how the chatbot selects a response from the repository. A retrieval-based chatbot seldom makes mistakes, since it consults a database for each user question and answers accordingly. That said, it has its limitations: it cannot answer questions that fall outside its repository, and it might not seem human. Many websites present a retrieval chatbot as their live agent, since such bots are simple to code and create. Although traditional, such a chatbot does keep track of a user’s previous messages, but it can only answer straightforward questions, not complicated ones.
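A retrieval-based bot can be sketched in a few lines of JavaScript. The FAQ entries and the 0.3 similarity threshold below are hypothetical, and bag-of-words cosine similarity is just one simple way to choose a response; it shows why such a bot never invents wording but also cannot answer anything its repository does not cover.

```javascript
// Hypothetical FAQ repository: the bot can only ever return one of these answers.
const repository = [
  { question: "what are your opening hours", answer: "We are open 9am to 5pm, Monday to Friday." },
  { question: "how do i track my order", answer: "Use the tracking link in your confirmation email." },
  { question: "what is your return policy", answer: "You can return items within 30 days." },
];

// Word-count vector for a piece of text.
function bagOfWords(text) {
  const counts = new Map();
  for (const w of text.toLowerCase().match(/[a-z0-9']+/g) || []) {
    counts.set(w, (counts.get(w) || 0) + 1);
  }
  return counts;
}

// Cosine similarity between two word-count vectors (0 when either is empty).
function cosine(a, b) {
  let dot = 0, sumA = 0, sumB = 0;
  for (const [w, c] of a) {
    dot += c * (b.get(w) || 0);
    sumA += c * c;
  }
  for (const c of b.values()) sumB += c * c;
  return sumA === 0 || sumB === 0 ? 0 : dot / Math.sqrt(sumA * sumB);
}

// Return the stored answer whose question is most similar, or a fallback below the threshold.
function respond(userText, threshold = 0.3) {
  const query = bagOfWords(userText);
  let best = null;
  let bestScore = 0;
  for (const entry of repository) {
    const score = cosine(query, bagOfWords(entry.question));
    if (score > bestScore) {
      best = entry;
      bestScore = score;
    }
  }
  return bestScore >= threshold ? best.answer : "Sorry, I can't help with that yet.";
}

console.log(respond("How can I track my order?"));
```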

Your word vectors also need to cover popular acronyms like LOL, LMAO, TTYL, etc. These are rarely part of conversation datasets but are widely used on social media and in other informal conversations.

You can create your own word vectors or look for online tools that can do it for you. Deep learning chatbots developed in Python typically use Python to build these word vectors as well.
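As a rough illustration of how word vectors can capture slang, the JavaScript sketch below builds simple count-based vectors: each word is represented by the words that appear within two positions of it. This is a swapped-in, much simpler technique than learned embeddings such as word2vec, and the four-sentence corpus is hypothetical, but it shows how "lol" ends up close to "haha" purely from context.

```javascript
// Count-based word vectors: for each word, count which words occur within `window` positions.
function cooccurrenceVectors(sentences, window = 2) {
  const vectors = new Map();
  for (const sentence of sentences) {
    const words = sentence.toLowerCase().match(/[a-z0-9']+/g) || [];
    words.forEach((word, i) => {
      if (!vectors.has(word)) vectors.set(word, new Map());
      const vec = vectors.get(word);
      for (let j = Math.max(0, i - window); j <= Math.min(words.length - 1, i + window); j++) {
        if (j !== i) vec.set(words[j], (vec.get(words[j]) || 0) + 1);
      }
    });
  }
  return vectors;
}

// Cosine similarity between two sparse count vectors.
function cosine(a, b) {
  let dot = 0, sumA = 0, sumB = 0;
  for (const [w, c] of a) {
    dot += c * (b.get(w) || 0);
    sumA += c * c;
  }
  for (const c of b.values()) sumB += c * c;
  return sumA === 0 || sumB === 0 ? 0 : dot / Math.sqrt(sumA * sumB);
}

// Hypothetical informal corpus.
const corpus = [
  "that was so funny lol",
  "that was so funny haha",
  "see you later ttyl",
  "see you later bye",
];
const vecs = cooccurrenceVectors(corpus);
console.log(cosine(vecs.get("lol"), vecs.get("haha")), cosine(vecs.get("lol"), vecs.get("ttyl")));
```

With a realistic corpus, the same principle lets acronyms like LOL or TTYL land near the words people use them in place of.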

For this step, you need someone well-versed in Python and TensorFlow. To create a Seq2Seq model, you need to write a Python script for your machine learning chatbot. You can also outsource this Python development to a company offering such services. Once the script is in place, you can build and train the model with TensorFlow.
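For reference, a Seq2Seq (encoder-decoder) model is commonly described as follows; this is the standard textbook formulation, not something specific to the tools mentioned here. An encoder, often an LSTM, reads the input message $x_1, \dots, x_T$ into a fixed-length vector $v$, and a decoder generates the response $y_1, \dots, y_{T'}$ one token at a time, each conditioned on $v$ and the tokens already produced:

$$
P(y_1, \dots, y_{T'} \mid x_1, \dots, x_T) = \prod_{t=1}^{T'} P\left(y_t \mid v, y_1, \dots, y_{t-1}\right)
$$

Training on the message-response pairs then amounts to minimizing the negative log-likelihood of each reference response:

$$
\mathcal{L} = -\sum_{(x, y)} \sum_{t=1}^{T'} \log P\left(y_t \mid v, y_1, \dots, y_{t-1}\right)
$$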

After developing the Seq2Seq model, track your chatbot’s training process. You can probe your chatbot with different input strings, test its outputs for specific questions about your business, and improve the chatbot’s structure in the process.

Over time, the deep learning chatbot will learn to complete sentences while following the rules of spelling, grammar, and punctuation.

Once you’ve fed data to the chatbot and tested it with the Seq2Seq model, you need to launch it somewhere it can interact with people.

Now, you have two options: either launch the chatbot publicly on your app or website, or soft-launch it to a small group of people who will interact with the chatbot and test its answering and response abilities.

Set up a server, install Node, create a folder, and start your new Node project. If required, install the other Node dependencies as well. The following code from HackerNoon installs the needed Node dependencies and sets up a basic server. Set up the chatbot as described in the comments and customize it accordingly.

```shell
npm install express request body-parser --save
```

The next step is to create an index.js file and authenticate the bot:

```javascript
'use strict'

const express = require('express')
const bodyParser = require('body-parser')
const request = require('request') // for outbound HTTP calls; not needed for verification
const app = express()

app.set('port', (process.env.PORT || 5000))

// Process application/x-www-form-urlencoded
app.use(bodyParser.urlencoded({extended: false}))

// Process application/json
app.use(bodyParser.json())

// Index route
app.get('/', function (req, res) {
  res.send('Hello world, I am a chat bot')
})

// For Facebook verification: echo the challenge only when the token matches
app.get('/webhook/', function (req, res) {
  if (req.query['hub.verify_token'] === 'my_voice_is_my_password_verify_me') {
    return res.send(req.query['hub.challenge']) // return so we don't send a second response
  }
  res.send('Error, wrong token')
})

// Spin up the server
app.listen(app.get('port'), function () {
  console.log('running on port', app.get('port'))
})
```

Create a file and name it Procfile. Paste the following in it:

```
web: node index.js
```

Then commit all the code with Git and create a new Heroku instance:

```shell
git init
git add .
git commit --message "hello world"
heroku create
git push heroku master
```

Probably one of the most tense and stressful stages for a developer, the final step is to test your machine learning chatbot and evaluate its success in the market. You need to assess its conversational model and check the chatbot on the following targets:

You can test the chatbot’s responses against these target metrics and correlate the results with human judgments of how appropriate each reply is in its context. Wrong or unrelated responses receive a low score, signaling that new data should be added to the chatbot’s training.
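One common way to "correlate with human judgment" is the Pearson correlation between an automatic score and human ratings of the same replies. The JavaScript sketch below assumes hypothetical scores for five replies and a 1 to 5 human rating scale; a correlation close to 1 suggests the automatic metric tracks what people consider appropriate.

```javascript
// Pearson correlation between automatic metric scores and human ratings for the same replies.
// Returns NaN when either list is constant (its variance is zero).
function pearson(xs, ys) {
  if (xs.length !== ys.length || xs.length < 2) {
    throw new Error("need two equal-length lists with at least 2 items");
  }
  const n = xs.length;
  const mean = (v) => v.reduce((s, x) => s + x, 0) / n;
  const mx = mean(xs);
  const my = mean(ys);
  let cov = 0, vx = 0, vy = 0;
  for (let i = 0; i < n; i++) {
    const dx = xs[i] - mx;
    const dy = ys[i] - my;
    cov += dx * dy;
    vx += dx * dx;
    vy += dy * dy;
  }
  return cov / Math.sqrt(vx * vy);
}

// Hypothetical scores for five chatbot replies.
const metricScores = [0.9, 0.2, 0.7, 0.4, 0.1];
const humanRatings = [5, 1, 4, 3, 1]; // 1 = unrelated, 5 = fully appropriate
console.log(pearson(metricScores, humanRatings).toFixed(2));

// Replies that humans rated low are candidates for new training data.
const needsMoreData = humanRatings.map((r, i) => (r <= 2 ? i : -1)).filter((i) => i >= 0);
console.log(needsMoreData);
```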

Once you have interacted with your machine learning chatbot, you will gain valuable insights into what to improve, leading to more effective conversations. Adding more datasets is one way to improve your chatbot’s conversational skills and let it provide a variety of answers to queries based on the scenario.

A chatbot should be able to tell apart different conversations with the same user. For that, you need to take care of the encoder and decoder messages and how they correlate. Then tune hyperparameters such as the number of LSTM layers, LSTM units, training iterations, optimizer choice, etc.
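A simple way to explore these hyperparameters is a grid search: enumerate every combination and train one model per configuration. The JavaScript sketch below only builds the configurations; the parameter names and values are hypothetical placeholders, not recommendations, and the actual training call would live in your Python/TensorFlow script.

```javascript
// Hypothetical search space; values are placeholders, not recommendations.
const searchSpace = {
  lstmLayers: [1, 2],
  lstmUnits: [128, 256],
  trainingIterations: [10000],
  optimizer: ["adam", "rmsprop"],
};

// Expand the search space into every combination (a simple grid search).
function gridSearchConfigs(space) {
  return Object.entries(space).reduce(
    (configs, [name, values]) =>
      configs.flatMap((config) => values.map((value) => ({ ...config, [name]: value }))),
    [{}]
  );
}

const configs = gridSearchConfigs(searchSpace);
console.log(configs.length); // 2 * 2 * 1 * 2 = 8
console.log(configs[0]);
```

Each configuration would then be trained and scored with the same evaluation, keeping the best one. Grids grow multiplicatively, so keeping each list short matters.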

You might think that a machine learning chatbot is a powerful product that can answer many questions, particularly for people who lack a human companion in their lives.

However, the truth is that machine learning chatbots still cannot match the biological mechanisms behind human conversation.

There are still several pitfalls associated with chatbots and their evolution. Machine learning, at best, can train chatbots to recreate human conversations, but it can’t make them entirely human. Despite extensive research in the field, a chatbot’s neural dialogue is not yet ready for open-domain conversation. Chatbots are, however, great for closed-domain chats such as technical answers, Q&As, customer support, etc. Still, humans would love to talk to someone supportive, fascinating, and always present.

Chatbots are an emerging technology for the future. As systems become more sophisticated, software keeps innovating, and developers devise better programming languages, machine learning chatbots will become more useful. Noteworthy assistants on the market, such as Amazon Alexa, Google Home, and IBM Watson, bring together millions of pieces of data, which requires a lot of hard work.

Artificial intelligence and machine learning are radically evolving, and in the coming years, chatbots will too. With machine learning chatbots, you will be able to resolve customer queries faster and better.
